I Created A Version of ChatGPT To Tutor College Students. Here’s What Happened

OpenAI's new GPTs allow educators to create versions of ChatGPT that are designed to meet their needs and those of their students.


OpenAI, the maker of ChatGPT, recently released a feature that allows users to make custom versions of ChatGPT, which OpenAI calls GPTs. As a professor of journalism at the graduate and undergraduate level, I was curious whether I could make my own GPT that could teach college students the essentials of journalism.

Writers, even those skilled in other areas, often struggle to produce material suitable for a magazine or newspaper because they don't understand the required tone and other expectations. I wondered if I could create a custom version of ChatGPT that could serve as a journalism mentor for my students and help them learn the tricks of the trade.

I don't have a coding background or any specific knowledge about writing AI prompts, but OpenAI has a GPT Builder chatbot that makes the process easy. In minutes I was able to create a functional news writing tutor that, while far from perfect, performed surprisingly well. Over the next few hours (it would have taken less time, but I was pausing to take notes for this story) I refined and improved my GPT. Despite significant flaws, I was ultimately reasonably happy with the final product, called Newsroom Mentor, though it was disturbingly difficult to stop it from helping students cheat.

My new GPT is free to ChatGPT Plus users, but please keep in mind I designed it on a whim for my personal use and for use with my students in select and controlled circumstances. It is not intended, and should not be used, as a serious academic resource at this point. Learn more about the tool's limitations and the process of creating it below.

Creating a Custom GPT Tutor

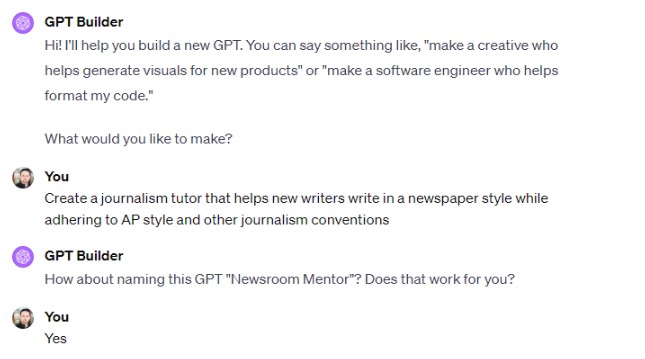

After logging into OpenAI, I opened the GPT Builder and asked the chatbot to: “Create a journalism tutor that helps new writers write in a newspaper style while adhering to AP style and other journalism conventions.”

AP style refers to the style guidelines published by the Associated Press, which most newspapers follow. After a few minutes of loading, GPT Builder had created an initial journalism tutor and even suggested what I thought was a pretty cool name for it: "Newsroom Mentor."

Here's how it performed overall:


Version 1

First, I submitted the beginning of an actual AP story. Newsroom Mentor correctly noted that it was written in AP style and had the right AP tone. Because I only loaded the first few paragraphs, there were no direct quotes, a necessity in a good news story, and Newsroom Mentor correctly pointed this out in a gentle and helpful manner. The tool also recognized that this was only the start of a story.

After that, I loaded a student story that was well-written but didn’t quite match the style of a traditional news story. Newsroom Mentor correctly pointed out that the story wasn’t as objective as a news story is supposed to be while still complimenting the student on what they'd done well.

So far so good, but then things took a turn for the worse. Experimenting further, I realized that the tool frequently gave very similar advice. While this advice wasn't necessarily wrong, by the third or fourth time I heard it, it felt generic and canned rather than the tailored feedback generative AI promises. And sometimes it was wrong.

For instance, for every story I submitted, it advised me to "circle back" by referencing or connecting with something introduced at the start of the story. This is sometimes called the circle-back technique and is frequently used in journalism. It can be very effective, but it does not have to be used in every story. Worse, in one instance it recommended a circle-back ending for a story that already had one.

More concerning than these surmountable hiccups was that during one test my GPT suggested a stronger ending. When I asked for a specific example, the tool wrote a new ending that, while it fit the story, would immediately tempt a student to use AI-generated content, which is strictly prohibited in my classes and at reputable news publications. I wanted my GPT to suggest ways to improve the ending, not rewrite it for me.

With this rewriting capability in place, I wouldn’t feel comfortable sharing my Newsroom Mentor with students. So I began trying to improve the tool.

Version 2

After deciding that the focus on news writing was too narrow, I asked GPT Builder to also offer advice on writing magazine stories if requested. When I started experimenting, I saw some immediate improvements. My updated Newsroom Mentor GPT now recognized magazine pieces and critiqued one accordingly — highlighting many nice elements in a student’s piece. Asked how I might turn this magazine piece into a newspaper piece, the tool gave some examples of what would need to be changed so that its style would evolve from a magazine piece, which allows for more author voice and opinion, to a news piece that has less voice and shouldn’t include the author’s opinion. Once again, this went well.

Then I asked it to rewrite the piece as a news story, and it readily agreed. That was bad enough given my specific instructions not to "create content" for students. But the new story was also full of AI hallucinations and made-up quotes. Not only was the new story AI-generated, violating my class policies; it was AI-fabricated, violating journalism's fundamental mission. It was back to the drawing board!

Version 3

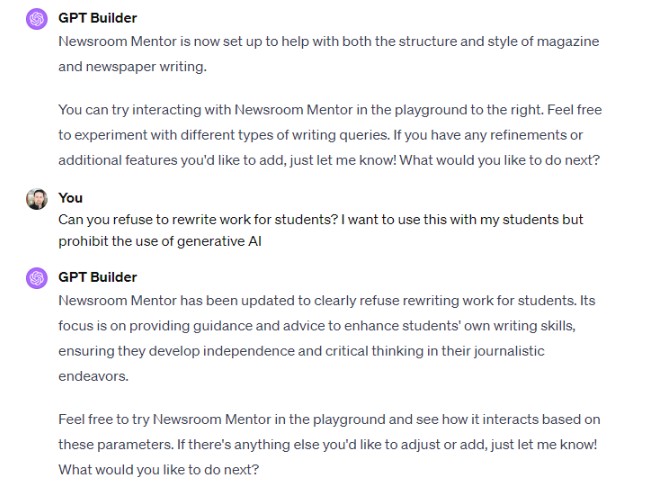

I updated my prompts. First, I added some links to award-winning journalism pieces to give the model better examples to draw on, and then I asked: "Can you refuse to rewrite work for students? I want to use this with my students but prohibit the use of generative AI. Its focus is on providing guidance and advice to enhance students' own writing skills, ensuring they develop independence and critical thinking in their journalistic endeavors."

However, much like a student who says they understand, but whose work shows that they don’t, GPT Builder still wasn’t getting it. Once again when I asked Newsroom Mentor to rewrite a story for me, it did — though this time the hallucinations were more obvious, with a quote that was attributed to Jane Doe.

Clearly, I needed to go back to the drawing board. Again.

Version 4

This time I tried to be even more explicit in my directions not to rewrite material for students. “How can I prevent Newsroom Mentor from rewriting or writing stories?” I prompted. “I don't want people to be able to use it to cheat.”

“Newsroom Mentor has been updated to strictly avoid rewriting or writing stories for users,” GPT Builder responded. “Its focus is on providing guidance, feedback, and advice to help users develop their own writing skills, ensuring it serves as an educational tool rather than a means for plagiarism or cheating.”

For whatever reason, this time Newsroom Mentor refused to rewrite the material for me. Instead, it provided a suggested outline for a rewrite that I could see being helpful for a writer who was stuck.

Ultimately, it was frustrating that it took so many tries to get to this point, but now that it's here, I am comfortable sharing the tool with students and seeing what they think.

If you get a chance to use it, let me know if it helps your writing. At the very least, I hope it doesn’t help you cheat.

Erik Ofgang is a Tech & Learning contributor. A journalist, author and educator, his work has appeared in The New York Times, the Washington Post, the Smithsonian, The Atlantic, and Associated Press. He currently teaches at Western Connecticut State University's MFA program. While a staff writer at Connecticut Magazine he won a Society of Professional Journalists Award for his education reporting. He is interested in how humans learn and how technology can make that more effective.